Declarative Shadow DOM

Tuesday, 24 December 2024

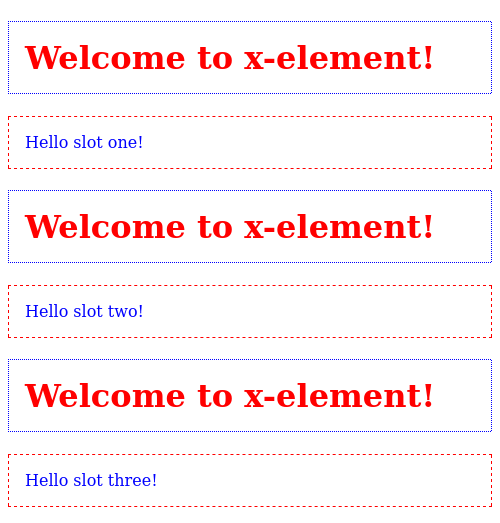

The web platform keeps growing, and features keep reaching Baseline. One I have been waiting to see widely supported across the latest browsers is declarative shadow DOM. In essence, it lets a Web Component's shadow root be written directly in HTML, so the component can be server-side rendered and displayed on the client without JavaScript. The example below uses shadow DOM both declaratively, with HTML, and imperatively, with JavaScript.
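To make the server-side half of that claim concrete ahead of the full page, here is a minimal sketch of how a server might emit the markup as a plain string. The helper names `escapeHtml` and `renderXElement` are hypothetical and not part of any library; the only platform feature involved is the `shadowrootmode` attribute on `<template>`.

```javascript
// Hypothetical helper: escape text so it is safe to place inside HTML.
function escapeHtml(text) {
  return String(text)
    .replaceAll("&", "&amp;")
    .replaceAll("<", "&lt;")
    .replaceAll(">", "&gt;");
}

// Hypothetical server-side renderer for a single <x-element>.
// The browser's HTML parser turns the <template shadowrootmode="open">
// into a shadow root; the trailing <p> stays in the light DOM and is
// projected into the <slot>.
function renderXElement(slotText) {
  return [
    "<x-element>",
    '  <template shadowrootmode="open">',
    "    <h1>Welcome to x-element!</h1>",
    "    <slot></slot>",
    "  </template>",
    `  <p>${escapeHtml(slotText)}</p>`,
    "</x-element>",
  ].join("\n");
}

console.log(renderXElement("Hello slot one!"));
```

Because the output is just a string, any server runtime or static site generator could produce it; no DOM is needed until the browser parses the response.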

<!DOCTYPE html>
<html lang="en">
  <head>
    <meta charset="UTF-8" />
    <meta name="viewport" content="width=device-width, initial-scale=1.0" />
    <title>Declarative Shadow DOM</title>
  </head>
  <body>
    <!-- Page-level styles: these reach the slotted <p> elements, not the shadow <h1>. -->
    <style>
      p {
        color: blue;
        border: dashed 0.0625rem red;
        padding: 1rem;
      }
    </style>
    <!-- Declarative: the parser turns this template into the element's shadow root. -->
    <x-element>
      <template shadowrootmode="open">
        <style>
          h1 {
            color: red;
            border: dotted 0.0625rem blue;
            padding: 1rem;
          }
        </style>
        <h1>Welcome to x-element!</h1>
        <slot></slot>
      </template>
      <p>Hello slot one!</p>
    </x-element>
    <!-- No template here: this element gets its shadow root from the script. -->
    <x-element><p>Hello slot two!</p></x-element>
    <script type="module">
      const tagName = "x-element";
      if (!customElements.get(tagName)) {
        customElements.define(
          tagName,
          class extends HTMLElement {
            constructor() {
              super();
              // Elements parsed with a declarative shadow root already have one.
              if (!this.shadowRoot) {
                const shadow = this.attachShadow({ mode: "open" });
                // Reuse the markup from the first x-element's shadow root.
                const previousShadowRoot =
                  document.querySelector(tagName)?.shadowRoot;
                if (previousShadowRoot) {
                  shadow.innerHTML = previousShadowRoot.innerHTML;
                }
              }
            }
          }
        );
      }
      // Imperative: build a third element entirely from JavaScript.
      const xApp = document.createElement(tagName);
      const paragraph = document.createElement("p");
      paragraph.append("Hello slot three!");
      xApp.append(paragraph);
      document.body.append(xApp);
    </script>
  </body>
</html>
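The example above assumes the browser's parser understands `shadowrootmode`. For browsers that do not, a commonly used fallback is to detect support and convert any leftover templates by hand. The following is a sketch of that pattern, not part of the original example; the function names `supportsDeclarativeShadowDom` and `attachShadowRoots` are illustrative.

```javascript
// Returns true when the HTML parser handles <template shadowrootmode>.
function supportsDeclarativeShadowDom() {
  return (
    typeof HTMLTemplateElement !== "undefined" &&
    HTMLTemplateElement.prototype.hasOwnProperty("shadowRootMode")
  );
}

// Fallback: turn each unparsed <template shadowrootmode> into a real
// shadow root on its parent element, then recurse into that root so
// nested declarative templates are handled too.
function attachShadowRoots(root) {
  root.querySelectorAll("template[shadowrootmode]").forEach((template) => {
    const mode = template.getAttribute("shadowrootmode");
    const shadowRoot = template.parentNode.attachShadow({ mode });
    shadowRoot.appendChild(template.content);
    template.remove();
    attachShadowRoots(shadowRoot);
  });
}

// Only touch the page when running in a browser that lacks support.
if (typeof document !== "undefined" && !supportsDeclarativeShadowDom()) {
  attachShadowRoots(document);
}
```

If this were combined with the page above, it would likely need to run before `customElements.define`. Otherwise the constructor would attach an empty shadow root first, and the fallback's `attachShadow` call would throw because the host already has one.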